AI Improves Decisions

But Security Is Still Judged by Outcomes

AI has materially improved how cybersecurity teams operate. Automated analysis shortens detection time, reduces manual effort, and accelerates response. These gains are real and widely observed.

What has not changed is how security success is measured. Incidents are still judged by outcomes: operational disruption, data exposure, regulatory impact, and recovery cost. Faster decisions alone do not guarantee safer systems.

What AI Does Not Yet Change: Accountability

As automation expands, a clear boundary remains. Decision-making can be delegated to machines; responsibility cannot. Governance models, regulatory frameworks, and risk ownership continue to assume organizational accountability.

This remains true today and is unlikely to change in the near term. When automated actions disrupt services or expose risk, accountability stays with the organization, regardless of how autonomous the decision path was.

This gap between decision speed and responsibility is often understated in AI-first narratives.

Breaches Escalate Because Control Breaks

Long-term breach data tells a consistent story. The Verizon Data Breach Investigations Report has repeatedly found the human element involved in a majority of breaches: error, misuse, or compromised credentials. These incidents rarely result from a lack of intelligence.

From another angle, Gartner has observed that most cloud security failures stem from misconfiguration and misuse rather than detection gaps. Organizations often know where risk exists but lack mechanisms to enforce appropriate access as conditions change.

The common failure is not blindness. It is loss of control after access is granted.

Where Risk Actually Grows

Security incidents rarely escalate at the moment a threat is detected. They escalate after trust has already been established and left unchecked.

Typical escalation patterns are predictable:

- Access approved once and never revalidated

- Legitimate users, devices, or workloads changing behavior without intervention

- Automated actions that cannot be precisely limited or quickly reversed

Speed amplifies the problem. CrowdStrike reports adversary breakout times (the time an attacker needs to move laterally from the first compromised host) measured in minutes, sometimes seconds. At that pace, delayed or advisory controls are structurally insufficient.

Risk grows along the paths where systems interact: network entry, session continuity, and endpoint activity.

Why Visibility and Control Enable AI

This is not an argument against AI. It is an argument for grounding AI in observable, enforceable reality.

As AI-driven automation accelerates, decisions are made faster and at greater scale. Without visibility, organizations cannot explain or validate those decisions. Without control, they cannot constrain their impact. Both gaps increase risk rather than reduce it.

Visibility provides factual context: who or what is operating, where access exists, and how behavior changes over time. Control ensures that this context can be acted on by limiting access, reducing blast radius, and keeping actions reversible when conditions shift.

AI depends on both.

- Without visibility, AI operates on fragmented signals.

- Without control, AI actions become disruptive.

Autonomy Changes Speed, Not Responsibility

As AI systems become more autonomous, the operating model changes. Actions are triggered automatically, and human involvement shifts from execution to oversight.

What autonomy does not change is responsibility.

Even when actions are taken autonomously, accountability for outcomes remains with the organization. Regulatory, audit, and risk frameworks do not recognize autonomous systems as accountable actors.

This creates a practical constraint. The more autonomous an action becomes, the more important it is that execution occurs within visible, bounded, and reversible environments.

- Autonomy without visibility removes explainability.

- Autonomy without control leaves the organization accountable for actions it cannot constrain.

In security, autonomy must operate within clearly defined gates.
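The idea of visible, bounded, and reversible execution can be made concrete. The following is a minimal Python sketch, not a product design: the `Action` and `ActionGate` names, the `max_targets` limit, and the rule that every action must carry an `undo` callback are all hypothetical choices made for illustration. The gate refuses actions that exceed a blast-radius limit or cannot be rolled back, and records every decision in an audit log.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Action:
    """A proposed automated action, e.g. isolating a host (hypothetical model)."""
    name: str
    targets: List[str]                          # assets the action would touch
    execute: Callable[[], None]
    undo: Optional[Callable[[], None]] = None   # None means the action is irreversible


@dataclass
class ActionGate:
    max_targets: int                            # illustrative blast-radius limit
    audit_log: List[str] = field(default_factory=list)
    _undo_stack: List[Action] = field(default_factory=list, init=False)

    def submit(self, action: Action) -> bool:
        # Bounded: refuse actions whose scope exceeds the policy limit.
        if len(action.targets) > self.max_targets:
            self.audit_log.append(
                f"DENY {action.name}: {len(action.targets)} targets > {self.max_targets}")
            return False
        # Reversible: refuse actions that provide no way to roll back.
        if action.undo is None:
            self.audit_log.append(f"DENY {action.name}: no rollback defined")
            return False
        action.execute()
        self._undo_stack.append(action)
        # Visible: every executed action leaves a record.
        self.audit_log.append(f"ALLOW {action.name} on {','.join(action.targets)}")
        return True

    def rollback_last(self) -> bool:
        """Undo the most recent allowed action, if any."""
        if not self._undo_stack:
            return False
        action = self._undo_stack.pop()
        action.undo()
        self.audit_log.append(f"ROLLBACK {action.name}")
        return True
```

The checks run before execution rather than after, which is the property the paragraph above argues for: an automated decision can still be fast, but only inside limits the organization has set and can later explain and reverse.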

The Gatekeeper Function

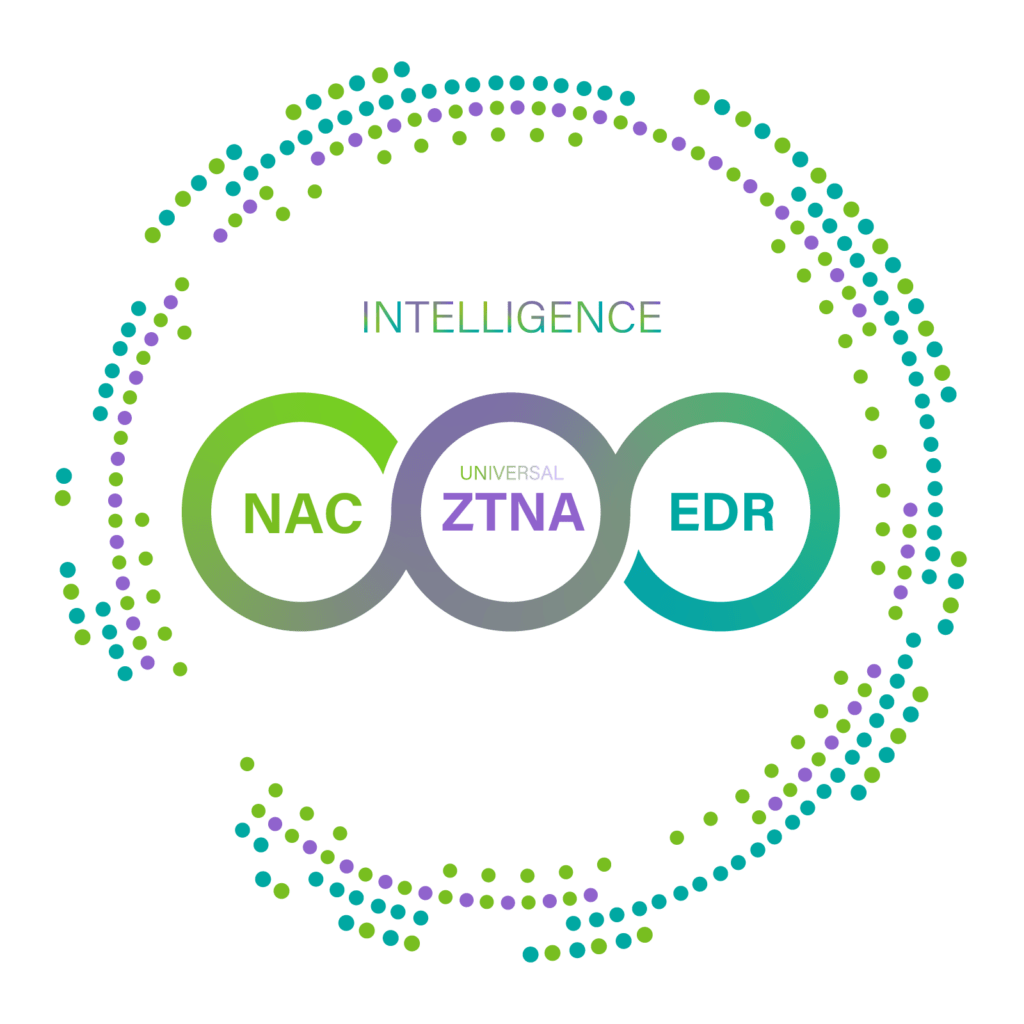

This is where Network Access Control (NAC), Zero Trust Network Access (ZTNA), and Endpoint Detection and Response (EDR) fit structurally.

- NAC governs who or what is allowed to connect at entry.

- ZTNA continuously validates what remains accessible as context evolves.

- EDR monitors runtime behavior after access is granted.

Together, these technologies form a continuous enforcement structure aligned with how incidents actually unfold. Their role is operational: ensuring access remains appropriate and deviations can be contained and reversed.
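One way to picture how the three layers divide the work is the toy model below, a Python sketch under simplified assumptions. The `Device` posture flags, the `SUSPICIOUS` behavior labels, and the functions `nac_admit`, `ztna_authorize`, and `edr_observe` are hypothetical; real NAC, ZTNA, and EDR products expose their own interfaces. Containment is modeled by deactivating the session, whereas an EDR tool would more commonly isolate the host or stop a process. The point is where each check sits: at entry, on every request, and during runtime.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Device:
    device_id: str
    managed: bool   # illustrative posture signal: enrolled in corporate management
    patched: bool   # illustrative posture signal: up to date


@dataclass
class Session:
    device: Device
    user: str
    allowed_apps: Set[str]
    active: bool = True
    events: List[str] = field(default_factory=list)


# Hypothetical behavior labels that trigger containment.
SUSPICIOUS = {"credential_dump", "mass_file_encrypt"}


def nac_admit(device: Device) -> bool:
    """Entry gate: only managed, patched devices may connect."""
    return device.managed and device.patched


def ztna_authorize(session: Session, app: str) -> bool:
    """Per-request check: posture and entitlement are re-evaluated every time,
    so access granted earlier is not assumed to still be valid."""
    if not session.active:
        return False
    if not nac_admit(session.device):  # posture may have drifted since entry
        return False
    return app in session.allowed_apps


def edr_observe(session: Session, behavior: str) -> None:
    """Runtime monitor: record behavior and contain the session on deviation."""
    session.events.append(behavior)
    if behavior in SUSPICIOUS:
        session.active = False  # containment: subsequent ztna_authorize calls fail
```

Because `ztna_authorize` re-runs the posture check on every request, a device that falls out of compliance mid-session loses access without a new login, and a containment decision from the runtime layer is honored by the next access check.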

Conclusion

The question is no longer whether AI belongs in cybersecurity. It already does. The more relevant question is how AI-driven and autonomous decisions are grounded in enforceable, observable reality.

- AI may dominate with speed and intelligence.

- Visibility shows what is happening.

- The gatekeeper controls the roads.

In cybersecurity, control of the roads determines what actually happens.